Assistant Professor

School of Intelligence Science and Technology

Peking University

Email: libin.liu [at] pku.edu.cn

I am an assistant professor at the School of Intelligence Science and Technology, Peking University. Before joining Peking University, I was the Chief Scientist of DeepMotion Inc. I was a postdoctoral research fellow at Disney Research and the University of British Columbia. I received my Ph.D. in computer science and B.S. degree in mathematics and physics from Tsinghua University.

I am interested in character animation, physics-based simulation, motion control, and related areas such as reinforcement learning, deep learning, and robotics. I put a lot of work into realizing various agile human motions on simulated characters and robots.

- 2026/02/06: I am now serving as an Associate Editor for ACM Transactions on Graphics (TOG)

- 2025/12/16: one paper accepted to Eurographics 2026

- 2025/08/11: two papers accepted to ACM SIGGRAPH Asia 2025

- 2025/07/06: one paper accepted to ACM Multimedia 2025

- 2025/03/11: I am invited to give a talk at the 1st Workshop on Humanoid Agents at CVPR 2025

- 2024/07/30: one paper accepted to ACM SIGGRAPH Asia 2024

- 2024/04/30: two papers accepted to ACM SIGGRAPH 2024

- 2024/02/14: one paper accepted to Eurographics 2024

- 2024/01/27: I am now serving as an Associate Editor for IEEE Transactions on Visualization and Computer Graphics (TVCG)

- 2023/07/06: our paper GestureDiffuCLIP: Gesture Diffusion Model with CLIP Latents received the SIGGRAPH 2023 Honorable Mention Award [news]

- 2023/05/20: I am invited to give a talk at the workshop Toward Natural Motion Generation at RSS 2023

- 2023/05/05: one paper accepted to SIGGRAPH 2023

- 2022/12/06: our paper Rhythmic Gesticulator: Rhythm-Aware Co-Speech Gesture Synthesis with Hierarchical Neural Embeddings received the SIGGRAPH Asia 2022 Best Paper Award

DexterCap: An Affordable and Automated System for Capturing Dexterous Hand-Object Manipulation

We introduce DexterCap, a robust, low-cost, and high-fidelity motion capture hardware system that captures complex, fine-grained hand–object interactions. Using this system, we create the DexterHand dataset, which includes subtle, fine-grained manipulation behaviors and interactions with objects such as a Rubik’s Cube.

Eurographics 2026.

[Project Page] [Paper] [Code] [Interactive Demo] [Dataset (coming soon...)]

We introduce SRBTrack, a terrain-adaptive motion tracking framework for virtual characters. By combining a single-rigid-body controller with momentum-mapped space-time optimization, it enables robust, real-time, and physically plausible full-body motions. Trained on unstructured data, SRBTrack generalizes across unseen terrains, disturbances, and versatile motion styles without retraining.

ACM SIGGRAPH Asia 2025 Conference Track.

ACM Transactions on Graphics, Vol 43 Issue 6 (SIGGRAPH Asia 2024).

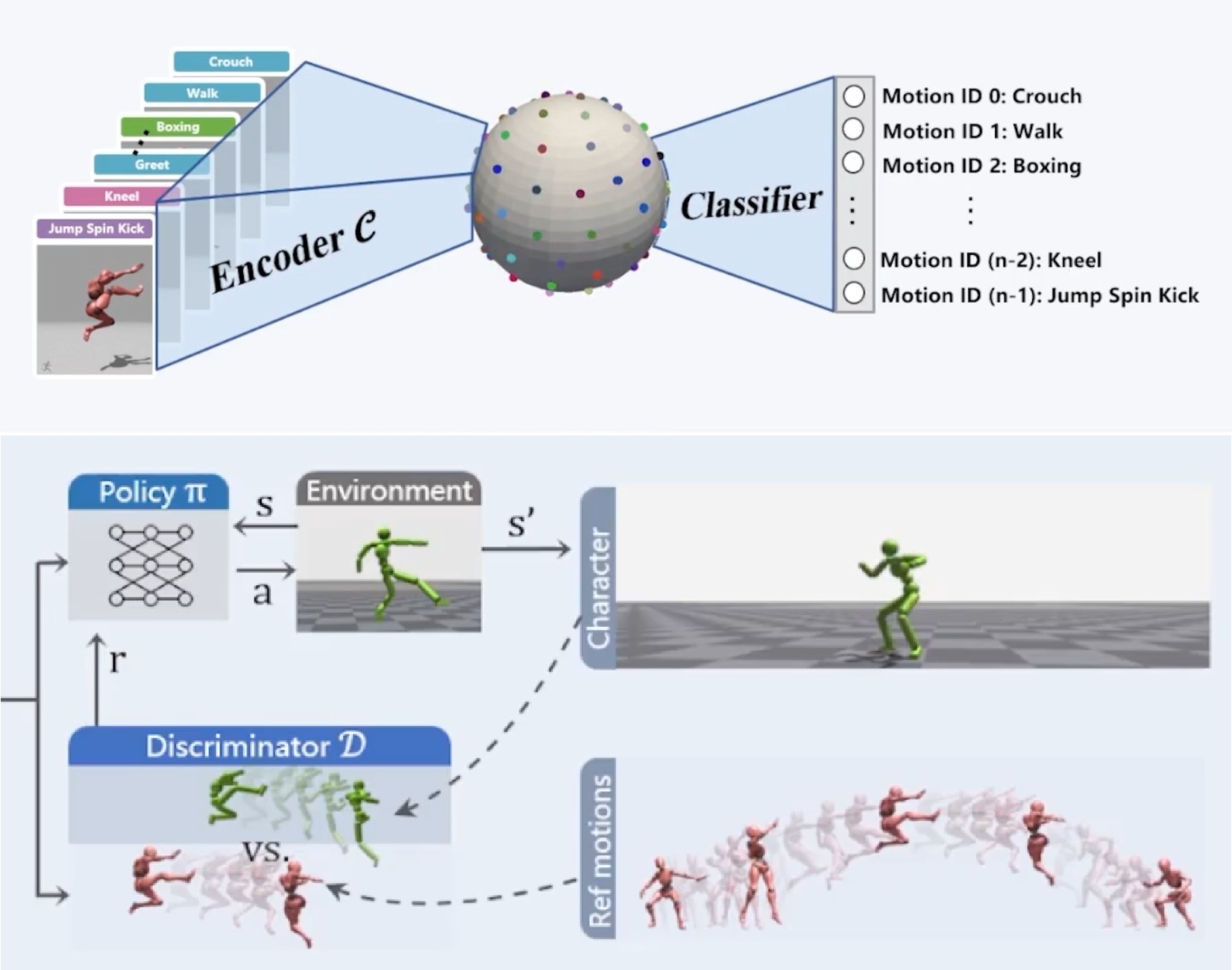

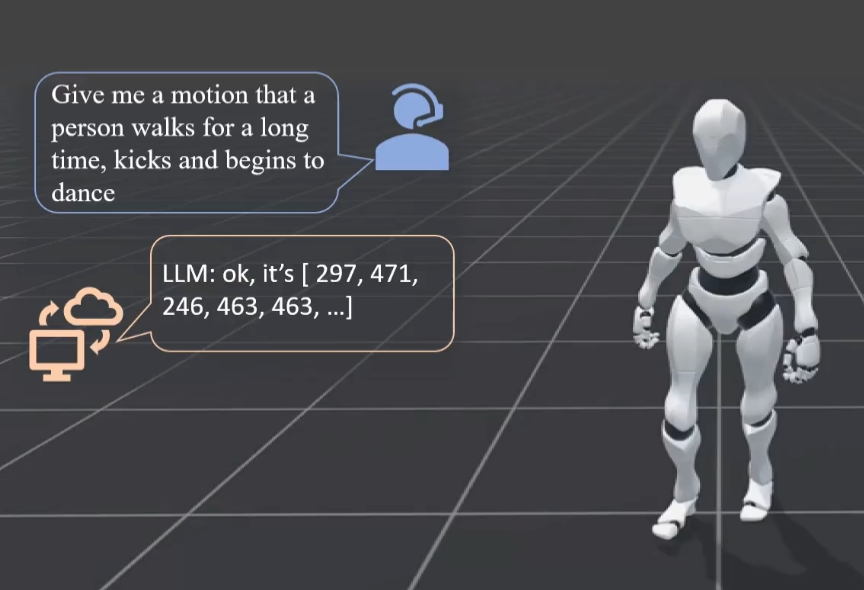

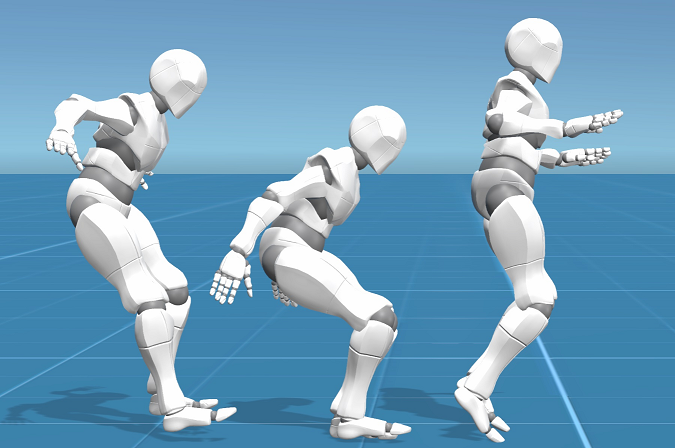

MoConVQ: Unified Physics-Based Motion Control via Scalable Discrete Representations

We present MoConVQ, a unified framework enabling simulated avatars to acquire diverse skills from large, unstructured datasets. Leveraging a rich and scalable discrete skill representation, MoConVQ supports a broad range of applications, including pose estimation, interactive control, text-to-motion generation, and, more interestingly, integrating motion generation with Large Language Models (LLMs).

ACM Transactions on Graphics, Vol 43 Issue 4, Article 144 (SIGGRAPH 2024).

[Project Page] [Paper] [Code]

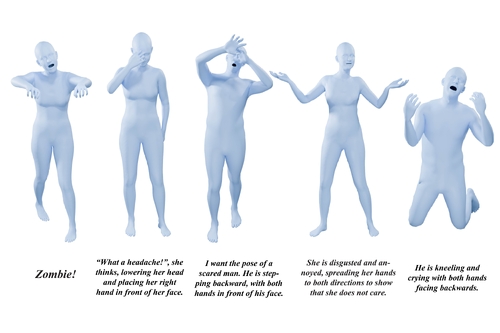

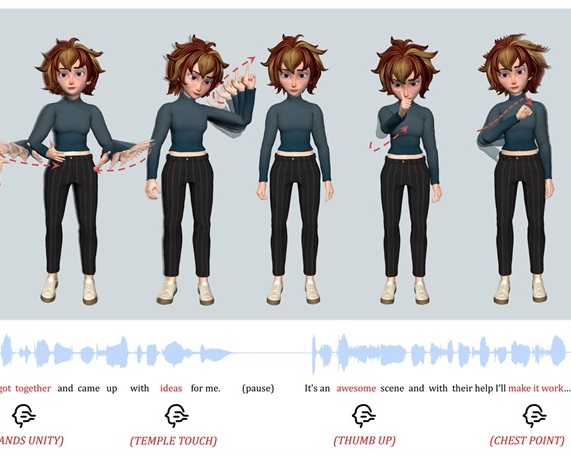

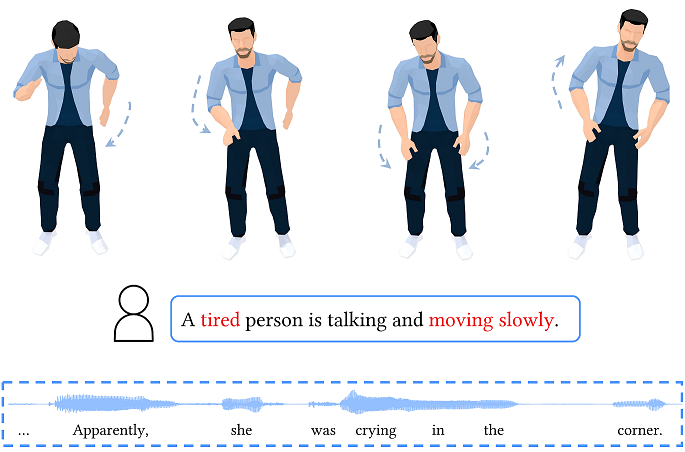

Semantic Gesticulator: Semantics-aware Co-speech Gesture Synthesis

We introduce Semantic Gesticulator, a novel framework designed to synthesize realistic co-speech gestures with strong semantic correspondence. Semantic Gesticulator fine-tunes an LLM to retrieve suitable semantic gesture candidates from a motion library. Combined with a novel GPT-style generative model, the framework generates gesture motions with strong rhythmic coherence and semantic appropriateness.

ACM Transactions on Graphics, Vol 43 Issue 4, Article 136 (SIGGRAPH 2024).

[Project Page] [Paper] [Video (YouTube)] [Dataset] [Code]

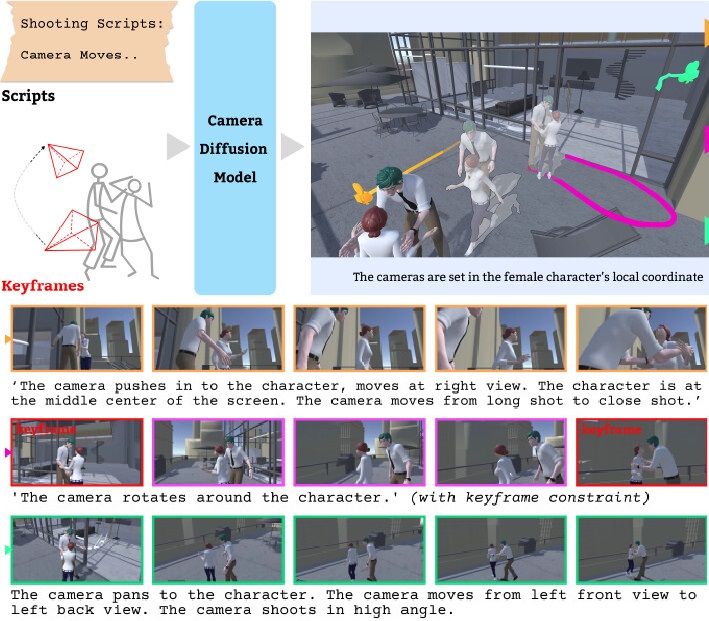

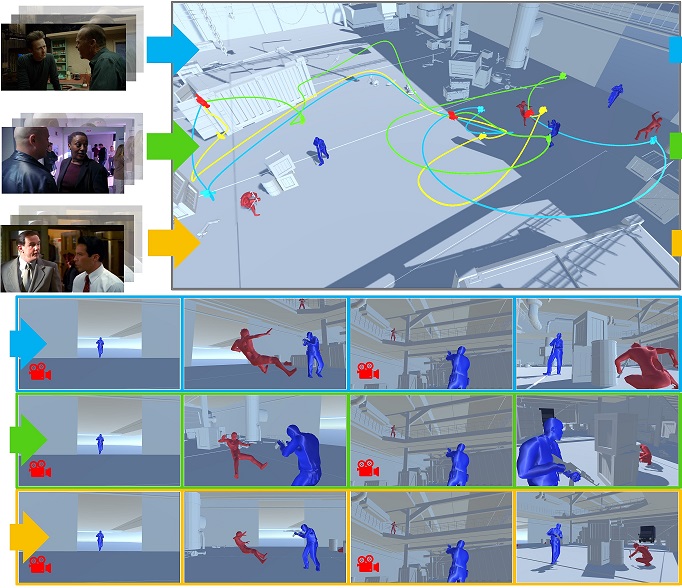

Cinematographic Camera Diffusion Model

We present a cinematographic camera diffusion model that uses a transformer-based architecture to handle temporality and exploits the stochasticity of diffusion models to generate diverse, high-quality trajectories conditioned on high-level textual descriptions.

Computer Graphics Forum, Vol 43 Issue 2 (Eurographics 2024).

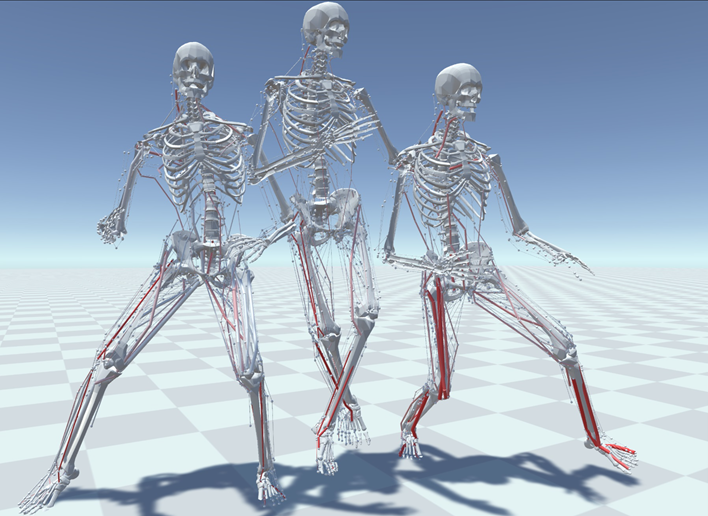

MuscleVAE: Model-Based Controllers of Muscle-Actuated Characters

We present a novel framework for simulating and controlling muscle-actuated characters. This framework generates biologically plausible motion and accounts for fatigue effects using model-based generative controllers.

ACM SIGGRAPH Asia 2023 Conference Track.

[Project Page] [Paper] [Video (YouTube|BiliBili)] [Code]

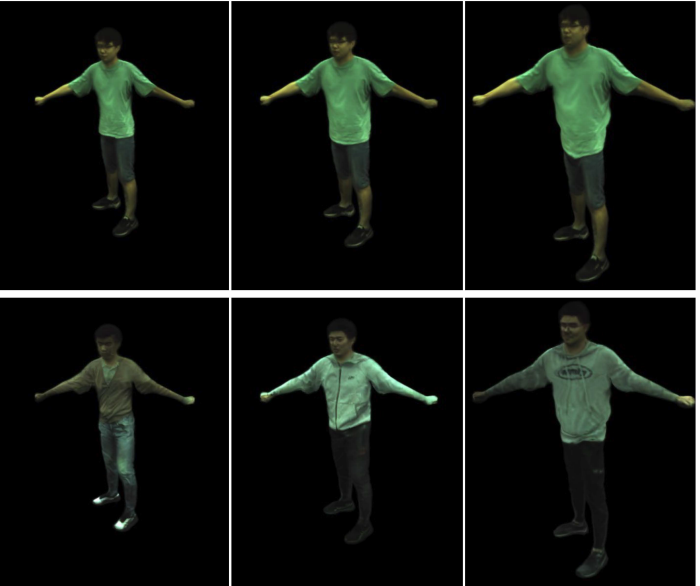

Neural Novel Actor: Learning a Generalized Animatable Neural Representation for Human Actors

We propose a new method for learning a generalized animatable neural human representation from a sparse set of multi-view imagery of multiple persons.

IEEE Transactions on Visualization and Computer Graphics, 2023

[Project Page] [Paper] [Video] [Code]

MotionBERT: A Unified Perspective on Learning Human Motion Representations

We present a unified perspective on tackling various human-centric video tasks by learning human motion representations from large-scale and heterogeneous data resources.

ICCV 2023

[Project Page] [Paper]

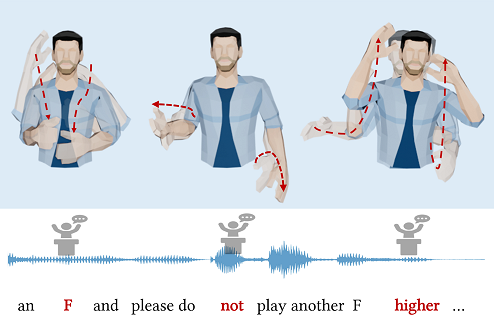

GestureDiffuCLIP: Gesture Diffusion Model with CLIP Latents

We introduce GestureDiffuCLIP, a CLIP-guided, co-speech gesture synthesis system that creates stylized gestures in harmony with speech semantics and rhythm using arbitrary style prompts. Our highly adaptable system supports style prompts in the form of short texts, motion sequences, or video clips and provides body part-specific style control.

ACM Transactions on Graphics, Vol 42 Issue 4, Article 40 (SIGGRAPH 2023). (SIGGRAPH 2023 Honorable Mention Award [news])

[Project Page] [Paper] [Video (YouTube|BiliBili)]

Control VAE: Model-Based Learning of Generative Controllers for Physics-Based Characters

We introduce Control VAE, a novel model-based framework for learning generative motion control policies, which allows high-level task policies to reuse various skills to accomplish downstream control tasks.

ACM Transactions on Graphics, Vol 41 Issue 6, Article 183 (SIGGRAPH Asia 2022).

[Project Page] [Paper] [Video (YouTube|BiliBili)] [Code]

Rhythmic Gesticulator: Rhythm-Aware Co-Speech Gesture Synthesis with Hierarchical Neural Embeddings

We present a novel co-speech gesture synthesis method that achieves convincing results in both rhythm and semantics.

ACM Transactions on Graphics, Vol 41 Issue 6, Article 209 (SIGGRAPH Asia 2022). (SIGGRAPH Asia 2022 Best Paper Award)

[Project Page] [Paper] [Video (YouTube|BiliBili)] [Code] [Explained (YouTube (English)|知乎 (Chinese))]

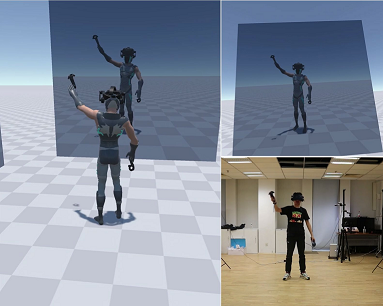

Neural3Points: Learning to Generate Physically Realistic Full-body Motion for Virtual Reality Users

We present a method for real-time full-body tracking using three VR trackers provided by a typical VR system: one HMD (head-mounted display) and two hand-held controllers.

Computer Graphics Forum, Vol 41 Issue 8, Pages 183-194 (SCA 2022).

[Project Page] [Paper] [Video (YouTube|BiliBili)]

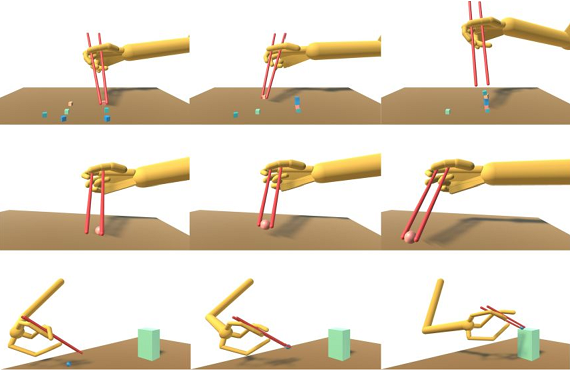

Learning to Use Chopsticks in Diverse Gripping Styles

We propose a physics-based learning and control framework for using chopsticks. Robust hand controls for multiple hand morphologies and holding positions are first learned through Bayesian optimization and deep reinforcement learning. For tasks such as object relocation, the low-level controllers track collision-free trajectories synthesized by a high-level motion planner.

ACM Transactions on Graphics, Vol 41 Issue 4, Article 95 (SIGGRAPH 2022).

[Project Page] [Paper] [Video (YouTube|BiliBili)] [Code]

Camera Keyframing with Style and Control

We present a tool that enables artists to synthesize camera motions following a learned camera behavior while enforcing user-designed keyframes as constraints along the sequence.

ACM Transactions on Graphics, Vol 40 Issue 6, Article 209 (SIGGRAPH Asia 2021).

[Project Page] [Paper 20.1MB] [Video] [Code]

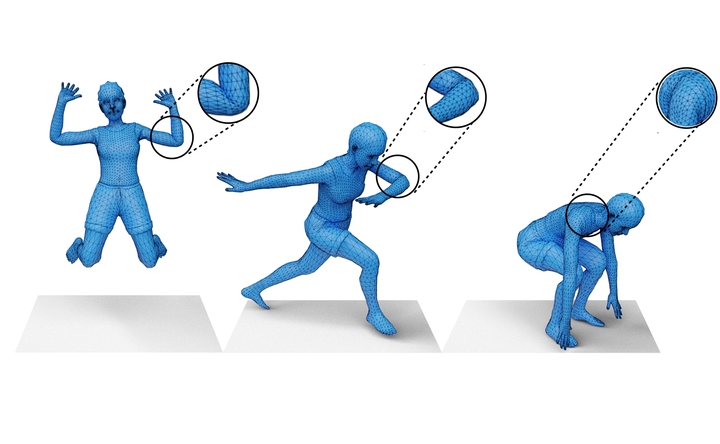

Learning Skeletal Articulations With Neural Blend Shapes

We present a technique for articulating 3D characters with pre-defined skeletal structure and high-quality deformation, using neural blend shapes: corrective, pose-dependent shapes that improve deformation quality in joint regions.

ACM Transactions on Graphics, Vol 40 Issue 4, Article 130 (SIGGRAPH 2021).

[Project Page] [Paper] [Video] [Code]

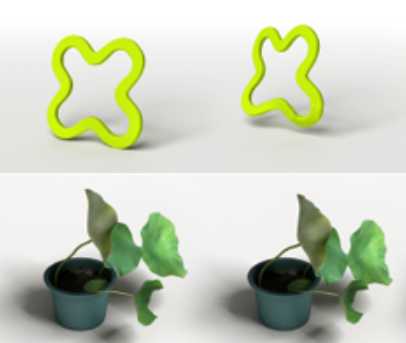

Unsupervised Co-part Segmentation through Assembly

We propose an unsupervised learning approach for co-part segmentation from images.

Proceedings of the 38th International Conference on Machine Learning (ICML), PMLR 139:3576-3586, 2021.

[Project Page] [Paper 3.2MB] [Supplementary 5.4MB] [Video 28MB] [Code]

Learning Basketball Dribbling Skills Using Trajectory Optimization and Deep Reinforcement Learning

We present a method based on trajectory optimization and deep reinforcement learning for learning robust controllers for various basketball dribbling skills, such as dribbling between the legs, running, and crossovers.

ACM Transactions on Graphics, Vol 37 Issue 4, Article 142 (SIGGRAPH 2018).

[Project Page] [Paper 6.7MB] [Video 123MB]

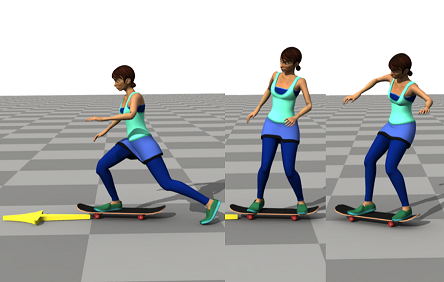

Learning to Schedule Control Fragments for Physics-Based Characters Using Deep Q-Learning

We present a deep Q-learning based method for learning a scheduling scheme that reorders short control fragments as necessary at runtime to achieve robust control of challenging skills such as skateboarding.

ACM Transactions on Graphics, Vol 36 Issue 3, Article 29. (presented at SIGGRAPH 2017)

[Project Page] [Paper 1.5MB] [Video 157MB]

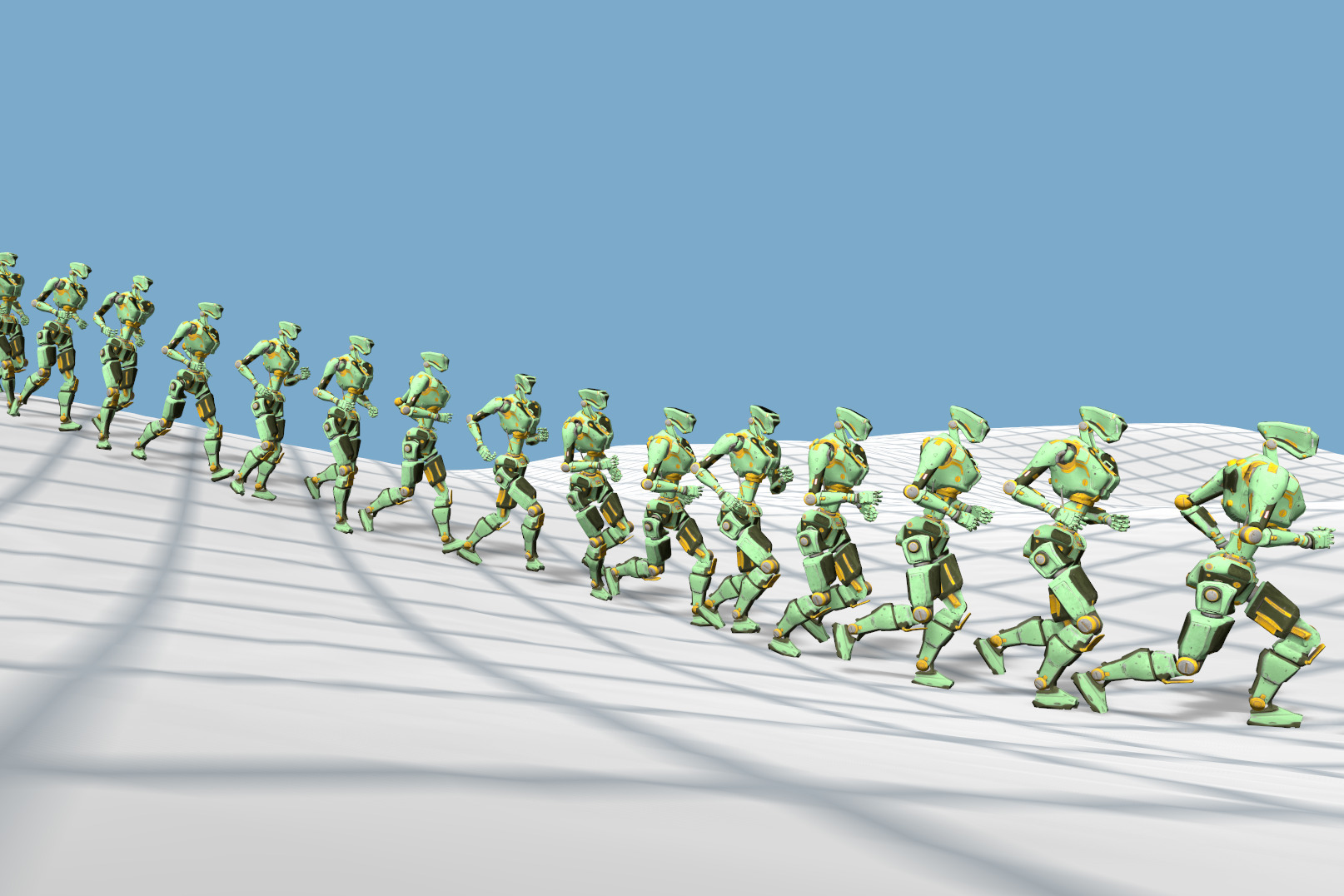

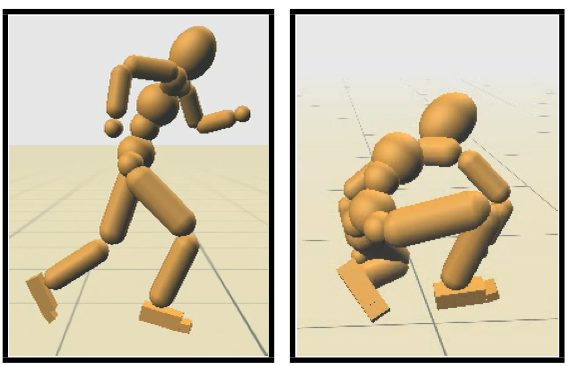

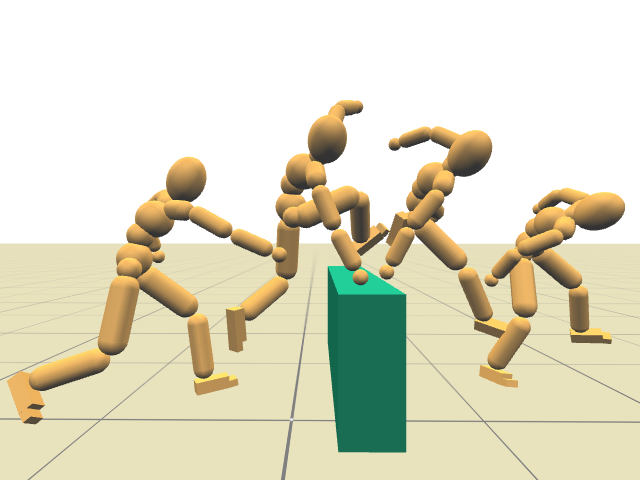

Guided Learning of Control Graphs for Physics-Based Characters

We present a method for learning robust control graphs that support real-time physics-based simulation of multiple characters, each capable of a diverse range of movement skills.

ACM Transactions on Graphics, Vol 35, Issue 2, Article 29. (presented at SIGGRAPH 2016)

[Project Page] [Paper 8.9MB] [Video 117MB] [Slides 1.6MB]

Learning Reduced-Order Feedback Policies for Motion Skills

We introduce a method for learning low-dimensional linear feedback strategies for the control of physics-based animated characters around a given reference trajectory.

Proc. ACM SIGGRAPH / Eurographics Symposium on Computer Animation 2015 (SCA Best Paper Award)

[Project Page] [Paper 2.5MB]

Deformation Capture and Modeling of Soft Objects

We present a data-driven method for deformation capture and modeling of general soft objects.

ACM Transactions on Graphics, Vol 34, Issue 4, Article 94 (SIGGRAPH 2015)

[Project Page] [Paper 70MB]

Improving Sampling-based Motion Control

We address several limitations of the sampling-based motion control method. A variety of highly agile motions, ranging from stylized walking and dancing to gymnastic and martial arts routines, can now be easily reconstructed.

Computer Graphics Forum 34(2) (Eurographics 2015).

[Project Page] [Paper 3.8MB] [Video 59.8MB]

Simulation and Control of Skeleton-driven Soft Body Characters

We present a physics-based framework for simulation and control of human-like skeleton-driven soft body characters. We propose a novel pose-based plasticity model to achieve large skin deformation around joints. We further reconstruct controls from reference trajectories captured from human subjects by augmenting a sampling-based algorithm.

ACM Transactions on Graphics, Vol 32, Issue 6, Article 215 (SIGGRAPH Asia 2013)

[Project Page] [Paper 6.4MB] [Video 106MB] [Slides 5.4MB]

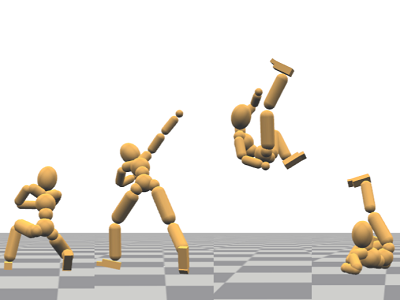

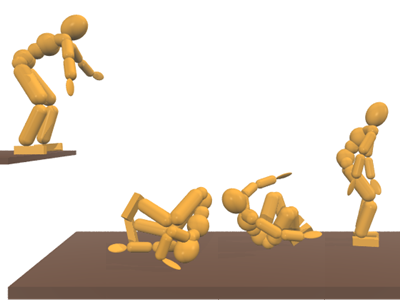

Terrain Runner: Control, Parameterization, Composition, and Planning for Highly Dynamic Motions

We present methods for the control, parameterization, composition, and planning for highly dynamic motions. More specifically, we learn the skills required by real-time physics-based avatars to perform parkour-style fast terrain crossing using a mix of running, jumping, speed-vaulting, and drop-rolling.

ACM Transactions on Graphics, Vol 31, Issue 6, Article 154 (SIGGRAPH Asia 2012)

[Project Page] [Paper 40MB] [Video: Full 103MB] [Slides 2.9MB]

Sampling-based Contact-rich Motion Control

Given a motion capture trajectory, we propose to extract its control by randomized sampling.

ACM Transactions on Graphics, Vol 29, Issue 4, Article 128 (SIGGRAPH 2010)

[Project Page] [Paper 6.8MB] [Video 44.3MB (with audio)] [Slides 1.6MB]

- 2025 - now: ACM Transactions on Graphics (TOG)

- 2024 - now: IEEE Transactions on Visualization and Computer Graphics (TVCG)

- ACM SIGGRAPH North America 2019, 2020, 2024, 2025

- ACM SIGGRAPH Asia 2022, 2023, 2026

- Eurographics 2024

- Pacific Graphics 2018, 2019, 2022, 2024

- SCA 2015-2019, 2021-2025

- MIG 2014, 2016-2019, 2022

- Eurographics Short Papers 2020, 2021

- SIGGRAPH Asia 2014 Posters and Technical Briefs

- CASA (Computer Animation and Social Agents) 2017, 2023

- Graphics Interface 2023

- CAD/Graphics 2017, 2019

- SIGGRAPH NA/Asia

- ACM Transactions on Graphics (TOG)

- IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)

- IEEE Transactions on Visualization and Computer Graphics (TVCG)

- International Conference on Computer Vision (ICCV)

- Eurographics (European Association for Computer Graphics)

- Pacific Graphics

- Computer Graphics Forum

- IEEE International Conference on Robotics and Automation (ICRA)

- ACM SIGGRAPH/Eurographics Symposium on Computer Animation (SCA)

- ACM SIGGRAPH Conference on Motion, Interaction and Games (MIG)

- Computer Animation and Social Agents (CASA)

- Graphics Interface

- Computers & Graphics

- Graphical Models

Social Agent: Mastering Dyadic Nonverbal Behavior Generation via Conversational LLM Agents

Zeyi Zhang, Yanju Zhou, Heyuan Yao, Tenglong Ao, Xiaohang Zhan, Libin Liu†

Social Agent is a framework for generating realistic co-speech nonverbal behaviors in dyadic conversations. Combining an LLM-driven agentic system with a dual-person gesture generation model, it produces synchronized, context-aware gestures and adaptive interactions. User studies show improved naturalness, coordination, and responsiveness in conversational nonverbal behaviors.

ACM SIGGRAPH Asia 2025 Conference Track.

[Project Page] [Paper]